On April 4, 2019, the DC chapter of the IEEE Computer Society Chapter on Quantum Computing (co-sponsored by the Nanotechnology Council Chapter) met for a presentation by an IBM researcher, Dr. Elena Yndurain, on that company's recent efforts in quantum computing. I was fortunate enough to attend. I was hoping the presentation would be technical enough to help me better understand the basics of quantum computing and, in particular, the timeline for when this new technology would be ready for the marketplace. In my own research (Jordan, 2010), I defined that point as the time when a working prototype is ready for full-scale testing. I was disappointed.

While setting up the real purpose of the talk, the presenter stated that quantum computing could be thought of as progressing through three phases of increasing complexity: (a) quantum annealing; (b) quantum simulation; and (c) universal quantum computing. Ultimately, the goal is (c), but the current state of the technology is (a).

The presenter also stated that there are essentially three candidate technologies for quantum computing: (a) superconducting loops; (b) trapped ions; and (c) topological braiding. Both (a) and (c) require cryogenic cooling. The IBM device uses technology (a), cooled down to 15 millikelvin (whew!). Technology (b) involves capturing ions in an optical trap using lasers. According to the presenter, this technology operates at room temperature but suffers from a signal-to-noise problem that (a) does not. Technology (c) was not discussed.

The IBM device is a 50-qubit machine. The basic functionality of the device is predicated on Shor’s algorithm (Shor’s algorithm, 2019) and Grover’s search algorithm (Grover’s algorithm, 2019). These mathematical algorithms were developed during the 1990s. They are complex-valued functions, so there is a real part and an imaginary part. When queried, the presenter stated that the gains achieved by this so-called quantum annealing device came from the simplicity of the computation, not the speed of the processor. The presenter went on to say that the basic algorithms had been coded in Python (Python (programming language), 2019).
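Since the talk gave no code, here is my own minimal sketch of what Grover's search looks like when simulated classically in Python. To be clear, this is not IBM's code and it does not run on quantum hardware. The function name `grover_search` and its parameters are my own inventions for illustration. It tracks one complex amplitude per item, flips the sign of the marked item, and then reflects all amplitudes about their mean.

```python
import math


def grover_search(n_qubits, marked):
    """Classically simulate Grover's search over 2**n_qubits items.

    Hypothetical helper for illustration only; not IBM's implementation.
    Returns the measurement probability of every item.
    """
    size = 2 ** n_qubits
    # Start in the uniform superposition: every item has the same amplitude.
    amps = [complex(1 / math.sqrt(size))] * size
    # Roughly (pi/4) * sqrt(N) iterations maximizes the success probability.
    iterations = math.floor(math.pi / 4 * math.sqrt(size))
    for _ in range(iterations):
        # Oracle step: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion step: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]
    # Measurement probabilities are the squared magnitudes of the amplitudes.
    return [abs(a) ** 2 for a in amps]


probs = grover_search(n_qubits=3, marked=5)
best = max(range(len(probs)), key=probs.__getitem__)
print(best, round(probs[best], 3))
```

With 3 qubits (8 items) the loop runs twice, and the marked item ends up with a probability of about 0.945. The simulation itself needs memory that doubles with every added qubit, which is one reason a classical sketch like this says nothing about how a real 50-qubit device performs.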

That the IBM device is based on a 50-qubit processor struck me as a bit coincidental. Recall from my first post on this subject that there has been an effort (by some unidentified group) to develop a fault-tolerant 50-qubit device since 2000. As of the publication of that paper, this had not been achieved (Dyakonov, 2019). When I asked about this, the presenter simply stated that the IBM device was fault-tolerant but declined to offer any specific, statistically based response. It should be noted that, during the presentation, Dr. Yndurain remarked that the information included was cherry-picked [my words, not hers] to put things in the best light. Why?

What became clear during the presentation is that IBM is building an ecosystem around the 50-qubit device. They have rolled this thing out as the “Q” computer. To gain access to the device, researchers must “subscribe” to the IBM service or simply “get in the queue”. One also has to go through a training/vetting process before being able to develop the program needed to solve a particular problem. Seriously?

It seems to me this leaves two fundamental questions on the table: (a) will quantum computing be the next great disruptive innovation that supplants silicon dioxide (Schneider, The U.S. National Academies reports on the prospects for quantum computing, 2018) (Schneider & Hassler, When will quantum computing have real commercial value? Nobody really knows, 2019) (Simonite, 2016)? and (b) what was the point of the presentation?

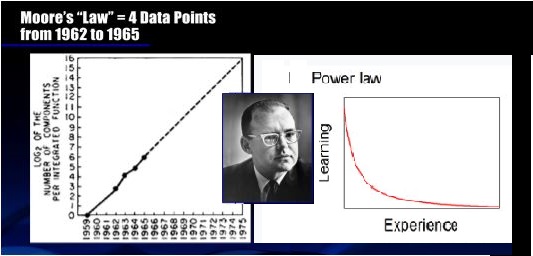

My answer to the first question is that I remain skeptical. When queried, the presenter said that the materials used were proprietary and would not be made available to the audience. I will also say that the presentation materials notably lacked specific information that could be verified. This suggests the answer to the second question: the presentation was a sales pitch. IBM seems to be building an ecosystem around this 50-qubit device that will solidify its market share in what was admittedly the very earliest stage of quantum computing. IBM seems to be continuing in the tradition of Moore’s law being a social imperative, not a physics-based phenomenon.

References

Dyakonov, M. (2019, March). The case against quantum computing. IEEE Spectrum, pp. 24-29.

Grover’s algorithm. (2019, April 5). Retrieved from Wikipedia: https://en.wikipedia.org/wiki/Grover%27s_algorithm

Jordan, E. A. (2010). The semiconductor industry and emerging technologies: A study using a modified Delphi Method. Doctoral Dissertation. AZ: University of Phoenix.

Python (programming language). (2019, April 7). Retrieved from Wikipedia: https://en.wikipedia.org/wiki/Python_(programming_language)

Schneider, D. (2018, Dec 5). The U.S. National Academies reports on the prospects for quantum computing. Retrieved from IEEE Spectrum: https://spectrum.ieee.org/tech-talk/computing/hardware/the-us-national-academies-reports-on-the-prospects-for-quantum-computing

Schneider, D., & Hassler, S. (2019, Feb 20). When will quantum computing have real commercial value? Nobody really knows. Retrieved from IEEE Spectrum: https://spectrum.ieee.org/computing/hardware/when-will-quantum-computing-have-real-commercial-value

Shor’s algorithm. (2019, April 7). Retrieved from Wikipedia: https://en.wikipedia.org/wiki/Shor%27s_algorithm

Simonite, T. (2016, May 13). Moore’s law is dead. Now what? Retrieved from MIT Technology Review: https://technologyreview.com