In the process of creating the special acknowledgement for all the innovation throughout history that resulted in AI, GenAI more specifically… I was lured to getting my fav GenAIs to work on the case. WoW!

Book(s) Release Today, Thurs, June 27th!!! … Human + Artificial Intelligence ... Special promotion price! $1.99!

UPDATE: #1 BEST SELLER in multiple categories in the USA. International is still counting… See links below… ! 🙂

Today is the BIG launch of the latest Refractive Thinker® book, an Anthology with a dozen authors, including yours truly! Today we welcome Vol XXV: Artificial Intelligence The New Frontier of the Digital Age, the 27th volume in the #1 International Best Selling series for and by doctoral scholars from around the globe.

Refractive Thinker eBook for the USA. https://www.amazon.com/dp/B0D7QR183G As of June 29, this promotion is still active… aiming for International best seller status! …

These are the GenAI prompts used to gather information about intellectual property (IP) and the world of artificial intelligence (AI), i.e., IP+AI. This is part of our Regenerative AI project; recreate as needed, when needed, with the GenAI engines available to you at that time. Select results come from various Generative AI engines (ChatGPT 4.0, Gemini, Claude, Copilot). Look at the writing and analysis of Human + Artificial Intelligence by Hall (and Hall & Lentz, 2024) over at ScenarioPlans.com (alias to DelphiPlan.com).

You, the reader/user, can recreate the prompts as needed, when needed, with the GenAI engines available to you at that time. Note that a couple of prompts are included with multiple GenAI engines for comparison.

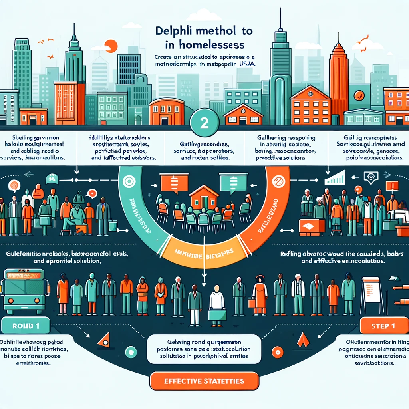

These are the prompts used to gather information about homelessness and to develop a Delphi Method study to research causes and possible interventions (locally). This is part of our Regenerative AI project; recreate as needed, when needed, with the GenAI engines available to you at that time. (Reprinted with permission from authors at NonprofitPlan.org and some adaptation here.)

Select GenAI results as of April 2024 of Delphi Research on Homelessness (from ChatGPT 4.0, Gemini, Claude, and Copilot) are available in this pdf document.

What does GenAI have to say about the scientific-based solutions promoted by Project Drawdown? ProjectDrawdown.org

<This Article is modified and reproduced from SustainZine.com, with permission. The discussion related to scenario planning is added here.>

Happy Earth Day! GenAI and I had a talk today about Earth Day… Over at our sister site on sustainability: SustainZine.com … The Earth Day 2024 posts are divided into: Overview, Part 1, and Part 2.

There’s a simple quiz on this Earth Day (Plastics). See what my fav GenAI chatbots had to say about getting to zero carbon emissions. What if GenAI were a world leader? Hmmm…

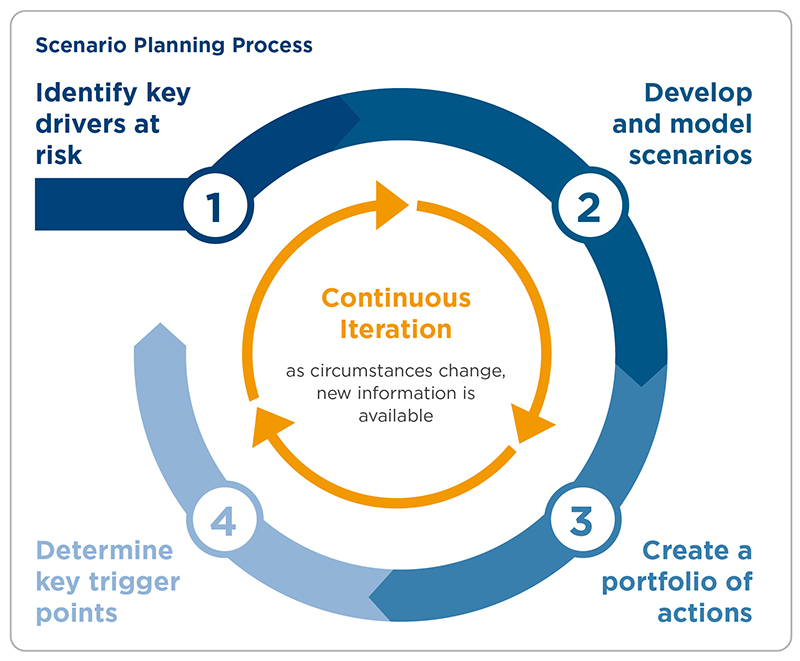

The scenario planning implications of Earth Day (and long-term sustainability) are dramatic. The cost estimates of doing nothing (well, doing more of the same, business as usual) continue to escalate. There’s probably no industry in any country that doesn’t have to include climate change in its scenario planning.

=> The Earth Day post using GenAI is part of our ongoing project to create Regenerative Dynamic (RegenD) Articles that combine Human + Artificial Intelligence (H+AI)… Along these lines, look at the variations of the Sustainability WikiBook, which is a dynamic book outline on sustainability topics that links to major Wikipedia pages. The first WikiBook has an introduction that was last updated in 2018. There’s a blog post related to getting GenAI to dynamically produce such a WikiBook with Wikipedia links.

#EarthDay #earthdayeveryday #Sustainability #ReduceReuseRecycle #ScenarioPlans #DelphiPlan #H+AI #RefractiveThinker

(First published on IntellZine on April 4, 2024, https://www.intellzine.com/2024/04/disruptive-innovation-why-ai-will-spark.html … Repeated here with permission.)

The innovation and investment guru Cathie Wood gave a TED talk in December 2023 about 5 pivotal innovation platforms that will (continue to) change the world as we know it. Her group expects to see sustained exponential growth that is fueled by the productivity gains in these areas, especially where they converge. The 5 platforms (AI, Robotics, Energy Storage, DNA sequencing, and blockchain) are described below, with AI in the center. One example she uses is the rapid move to self-driving taxis. TED talk with Cathie Wood, Why AI Will Spark Exponential Economic Growth. (2023, Dec. 18).

We are working on a Regenerative Dynamic approach to articles and blogs. Using Generative Artificial Intelligence (GAI), we provide the prompts that produced the answers (blog, table, graphic, etc.). This way the reader can regenerate and extend what we produce in the hyper-fast changing world of AI. A month from now, or a year from now, with a different GenAI, the results may be different. Also, there are often links to Wikipedia where the pages are continually updated.
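One way to picture the "provide the prompt so the reader can regenerate" idea is a small record that travels with each article. The following is only a minimal sketch under stated assumptions: the `PromptRecord` structure and the `rerun_plan` helper are hypothetical names invented for illustration, the date is illustrative, and no GenAI API is called. The engine names are plain labels taken from the list used on this site.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PromptRecord:
    """One published prompt plus the context a reader needs to re-run it.

    Hypothetical structure for illustration; not an existing tool or API.
    """
    prompt: str
    engine: str      # label only, e.g. "ChatGPT 4.0"; nothing is called
    asked_on: date   # when the original answer was generated


def rerun_plan(record: PromptRecord, engines: list[str]) -> list[str]:
    """Return the engines to try the same prompt on, original engine first."""
    others = [e for e in engines if e != record.engine]
    return [record.engine, *others]


record = PromptRecord(
    prompt="Besides ChatGPT and Google Gemini, what other major platforms use GenAI?",
    engine="ChatGPT 4.0",
    asked_on=date(2024, 4, 4),  # illustrative date, an assumption
)

plan = rerun_plan(record, ["Gemini", "Claude", "Copilot", "ChatGPT 4.0"])
for engine in plan:
    print(f"Re-ask on {engine}: {record.prompt}")
```

Keeping the date and engine next to the prompt is the design point: a reader who re-asks later, on a different engine, can see at a glance why the answer may differ.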

YOU: Besides ChatGPT and Google Gemini, what other major platforms use GenAI? Do Samsung and IBM have similar AI products?

This site has now taken a dive into AI, Generative Artificial Intelligence (GAI) in this case. AI Assistants are everywhere now, and proliferating. In search(ish) there is Google’s Gemini (formerly Bard); in Microsoft there’s Copilot, which utilizes OpenAI’s technology. And, of course, there is OpenAI’s ChatGPT itself, with the open source version available to anyone who has the time and money to obtain data, pre-train, and implement their own GAI system, typically for a more specific application like internal customer service.

There is a new heading on DelphiPlan.com (ScenarioPlan.com) that addresses Delphi + AI, or Scenario + AI… https://delphiplan.com/delphi-ai-primer/

A seminar for nonprofits included discussion of scenario planning as it pertained to the Great COVID Pandemic. It wasn’t so much scenario planning as we use it here on ScenarioPlans.com, as best, worst, and most-likely case (but more on that later). Imagine the nonprofit, out on thin ice, as the world’s economy went into lockdown. What if all funding froze up, even the promises of commitment? What if we are overwhelmed (underwhelmed) with customer needs? What if we have to cease operations (for an indefinite time)?

An excellent article with tools is at Bridgespan: Nonprofit Scenario Planning During a Crisis. (Image is from Bridgespan.)
