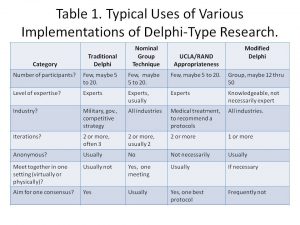

Consensus continues to be a big issue in designing a Delphi study. It helps a great deal to decide early how the results will be presented and how consensus will be determined. Even if consensus is not strictly necessary, every Delphi study looks at the level of agreement as a critical aspect of the research. See our prior blog article, Consensus: Let’s agree to look for agreement, not consensus. Hall (2009) discusses suggested approaches to consensus in The Delphi Primer, including the RAND/UCLA approach used in medical protocol research. Hall said: “A joint effort by RAND and the University of California is illustrated in The RAND/UCLA appropriateness method user’s manual. (Fitch, Bernstein, Aguilar, Burnand, LaCalle, Lazaro, Loo, McDonnell, Vader & Kahan, 2001, RAND publication MR-1269) which provides guidelines for conducting research to identify the consensus from medical practitioners on treatment protocol that would be most appropriate for a specific diagnoses.”

In the medical world, agreement can be rather important. Burnam (2005) offers a simple one-page discussion of the RAND/UCLA method used in medical research. The key points from Burnam and the RAND/UCLA method are:

- Experts are readily identifiable and are selected for their outstanding work in the field. They may have published research on the disease in question, be practitioners in the field (such as medical doctors), or both.

- The available research is organized and presented to the panel.

- The RAND/UCLA method specifies the approach used to reach consensus.

- The goal is to recommend an “appropriate” protocol.

The meaning of “appropriate” is clear. Burnam says, “appropriate, means that the expected benefits of the health intervention outweigh the harms and inappropriate means that expected harms outweigh benefits. Only when a high degree of consensus among experts is found for appropriate ratings are these practices used to define measures of quality of care or health care performance.”

Burnam compares and contrasts the medical protocol with the approach used by Addington et al. (2005), which brings in many other factors and stakeholders. Seven different stakeholder groups were represented, so the performance measures the panel selected as important covered a broader spectrum. The Addington et al. study included other performance measures, such as various dimensions of patient functioning and quality of life, satisfaction with care, and costs.

Burnam generally liked the addition of other factors beyond medical outcomes, saying that she applauds Addington et al. “for their efforts and progress in this regard. Too often clinical services and programs are evaluated only on the basis of what matters most to physicians (symptom reduction) or payers (costs) rather than what matters most to patients and families (functioning and quality of life).”

There are two key takeaways from this comparison for researchers considering Delphi Method research: decide in advance how the results will be presented, and decide in advance how consensus will be determined. If full consensus is really necessary, as in the case of a medical protocol, then make sure that is understood at the beginning of the research. Frequently, it is more important to know the level of importance of various factors in conjunction with the level of agreement. In business, management, and similar fields, the practitioner can review the totality of the research and apply the findings as needed, where appropriate.
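To make the idea of reporting importance alongside agreement concrete, here is a minimal sketch in Python. It is only an illustration under assumptions of our own: the 1-5 rating scale, the use of the median as the importance score, the interquartile range (IQR) as the agreement measure, and the IQR cutoff of 1 are all choices made for this example, not rules taken from the RAND/UCLA manual or from the studies discussed above. The function name `summarize_item` and the panel data are hypothetical.

```python
from statistics import median, quantiles


def summarize_item(ratings, max_iqr=1.0):
    """Summarize one item's panel ratings.

    Returns (median rating, interquartile range, agreement flag).
    max_iqr is a hypothetical cutoff chosen for this sketch.
    """
    # Quartiles of the ratings; "inclusive" treats the panel as the full population.
    q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
    iqr = q3 - q1
    # A narrow spread is treated here as a sign of agreement.
    return median(ratings), iqr, iqr <= max_iqr


# Made-up ratings from a nine-member panel on a 1-5 importance scale.
panel = {
    "Factor A": [4, 4, 5, 4, 5, 4, 3, 5, 4],
    "Factor B": [1, 5, 2, 5, 1, 4, 3, 5, 2],
}

for factor, ratings in panel.items():
    importance, spread, agreed = summarize_item(ratings)
    print(f"{factor}: median={importance}, IQR={spread}, agreement={agreed}")
```

In this made-up data, Factor A is rated as important with a tight spread, while Factor B lands in the middle of the scale only because the panel is split. Writing down the measures and the cutoff before the first round is one way to settle in advance how the results will be presented and how agreement will be judged.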

References

Addington, D., McKenzie, E., Addington, J., Patten, S., Smith, H., & Adair, C. (2005). Performance Measures for Early Psychosis Treatment Services. Psychiatric Services, 56(12), 1570–1582. doi:10.1176/appi.ps.56.12.1570

Burnam, A. (2005). Commentary: Selecting Performance Measures by Consensus: An Appropriate Extension of the Delphi Method? Psychiatric Services, 56(12), 1583–1583. doi:10.1176/appi.ps.56.12.1583

Fitch, K., Bernstein, S. J., Aguilar, M. D., Burnand, B., LaCalle, J. R., Lazaro, P., Loo, M., McDonnell, J., Vader, J. P., & Kahan, J. P. (2001). The RAND/UCLA appropriateness method user’s manual. Santa Monica, CA: RAND Corporation. Document MR-1269. Retrieved July 3, 2009, from: http://www.rand.org/publications/

Hall, E. (2009). The Delphi primer: Doing real-world or academic research using a mixed-method approach. In C. A. Lentz (Ed.), The refractive thinker: Vol. 2. Research methodology (2nd ed., pp. 3-28). Las Vegas, NV: The Lentz Leadership Institute. (www.RefractiveThinker.com)