A recession is destructive innovation. It has accelerated, for example, the Amazon effect on online sales and purchasing, with some 29 retailers closing. The recession of the 2020 pandemic is different in some respects: it is straining local restaurants and bars, even the best of the local ones.


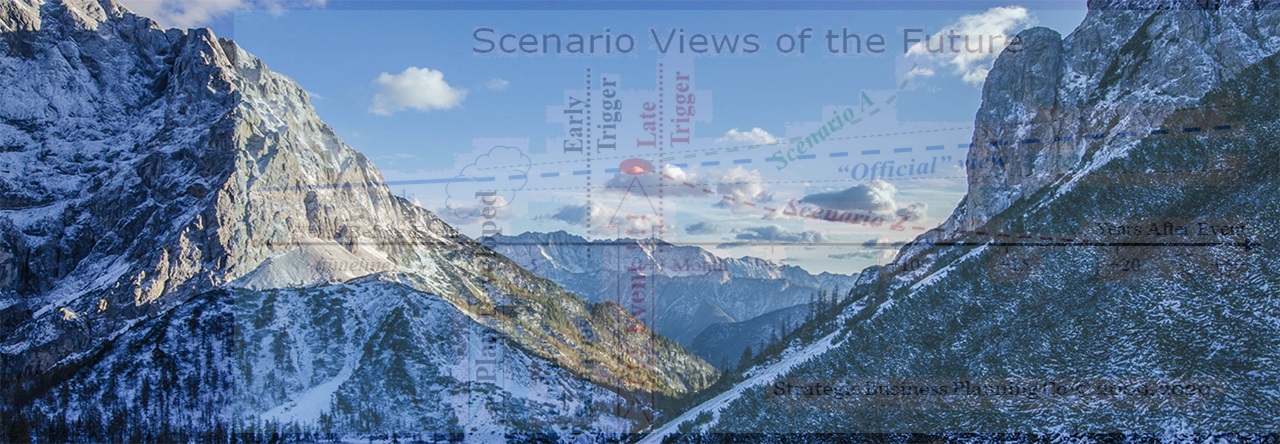

We have talked about how scenario planning could and should have helped us see this pandemic coming, and provided early warning signs for our continuity plans.

Oh My God!

The Trump administration completely shut down PREDICT, the USAID program that provided pandemic training and response worldwide. Since the bird flu of 2005 (H5N1), US presidents (Bush II and Obama) had moved toward building a program to identify potential pandemics and to help countries (including the USA) deal with such an eventuality. PREDICT went on to deal with several pandemic-type events, including SARS, MERS, Ebola and even Zika (mosquito-borne). The idea, which apparently worked very well, is to fight a pandemic where it originates in other countries, so that you don’t have to fight it here in the USA. Of course, the train-the-trainer program would also be developed and applied here in the USA.

Scenario planning should be back in focus. We go a few years (10 years now since the Great Recession) and start to think that the current trajectory, or the “Official View”, will hold this time. But the coronavirus brings all of that back into focus, even though people are probably not taking it as seriously as they should. You have to look at the entire supply chain, forward and backward. China plays a major role in many of the world’s supply chains: as end consumers on the one hand, and as production on the sourcing side. If factories are closed, if people can’t go to work, and if people don’t go out and buy, then both consumption and the supply chain are continually interrupted. China is initiating all kinds of stimulus. Telling banks to be forgiving toward affected factories seems like a good idea; no one wants factories to go out of business because of an exogenous event such as the virus. But other stimulus measures will be rather useless.

Probably no one knows, yet, how this epidemic will play out. There’s no reason to believe that it won’t be rather long and protracted for China, and the consequences for China will ripple throughout the world. In a densely (over)populated world, there is no reason to believe that such outbreaks won’t happen in other places, and more frequently.

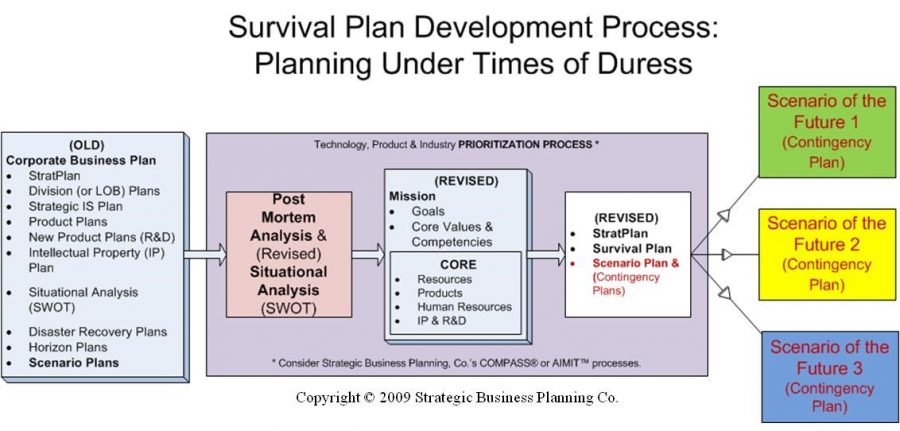

So, this brings us back to scenario planning. The advantage of scenario planning is that you can build Contingency or Disaster Recovery Plans based on various scenarios. Serious and protracted supply chain disruptions, no matter the cause, seem like logical scenarios.

Right now might be a good time to dust off the Contingency Plans and see if anything needs to be updated, or executed, because of the recent events.

In the 2018 Guide by Hall and Hinkelman, the scenario chapter discusses Y2K as the greatest scenario planning exercise in history. Read about the Y2K scenario from that chapter (pp. 161-163) below. Remember that right now many companies are executing their contingency plans related to current events, while many others are trying to develop them on the fly: kind of a fly-by-night approach to scenario planning.

*The section below is reproduced here with permission of the authors.*

The Great Scenario Planning Exercise, Y2K!

There were several major advantages to corporations’ planning (scenario planning, really) that came out of the Year 2000 (Y2K) preparation process. Planners were forced to consider at least two views of the future: the official view where Y2K caused no interruptions, and the view of chaos where it caused massive interruptions (mainly because of sustained interruptions to the power grid). One of the interesting parts of this process is the spillover implication (legal, moral and brand-image) of doing nothing in preparation and being wrong. The scenario planning processes associated with Y2K resulted in stronger business planning and improved disaster recovery plans (DRPs). They also helped with business continuity plans by building stronger relationships with critical business partners.

Many people would say that this is a bad example because Y2K was a bust. Actually, the major push to organize IT and transition from legacy systems contributed substantially to increased productivity for several years after the turn of the century. (Business productivity growth has been surprisingly low since about 2005.) Two examples where the Y2K efforts proved to be well justified are Burger King and FPL.

Burger King Corporation, then a division of DIAGEO, worked very closely with franchisees and its most critical suppliers (beef, buns, fries and Coke) to make sure that there would be no interruption and that contingency plans would be in place for likely situations related to Y2K. By far the biggest risk, and the most contingency-planning attention, went to AmeriServe. AmeriServe, the number-one supplier to the Burger King system, had bought out the number-two supplier and now represented three-fourths of the global supply chain. Three weeks into the new millennium, AmeriServe declared bankruptcy! Fortunately, the distribution contingency plans had been dramatically improved during 1999, and continuity actions were immediately executed. Although the bankruptcy had nothing to do with Y2K per se, much, if not all, of the contingency plan could be used for any distributor outage.

An adjunct to the Y2K story relates to power. Once organizations got past addressing their critical IT systems, the biggest wild card was power outages. No assurances came from the power companies until just months before the turn of the millennium, and even then, not much was given in the way of formal assurances. Of course, that was too late for a big organization with brand and food safety issues to have avoided the major contingency planning efforts.

Most people did not realize how fragile and antiquated the entire power grid was until the huge blackout of August 14, 2003, across Ohio, the Northeast and Canada (CNN). The cascading blackout disabled the Niagara-Mohawk power grid, leaving the Ottawa, Cleveland, Detroit and New York City regions without power. Twenty-one power plants shut down within a three-minute period because, with the grid down, there was no place to send the power. Because of a lack of adequate time-stamp information, for several days Canada was believed to be the initiator of the outage, not Ohio.

There have been similar blackouts in Europe. That Y2K could have resulted in massive outages may not have been so far-fetched after all. Ask someone who was stuck in an elevator for eight hours if the preparations for long-term power outages could have been better.

Hall (2009) developed a survival planning approach that would help an organization survive during times of extreme uncertainty, like the Great Recession. Of course, the process is much further along if the organization already has a good strategic plan (StratPlan) that includes contingency and scenario planning.

References

Hall, E. (2009). Strategic planning in times of extreme uncertainty. In C. A. Lentz (Ed.), The refractive thinker: Vol. 1. An anthology of higher learning (1st ed., pp. 41-58). Las Vegas, NV: The Lentz Leadership Institute. (www.RefractiveThinker.com)

Hall, E. B. & Hinkelman, R. M. (2018). Perpetual Innovation™: A guide to strategic planning, patent commercialization and enduring competitive advantage, Version 4.0. Morrisville, NC: LuLu Press. ISBN: 978-1-387-31010-4 Retrieved from: http://www.lulu.com/spotlight/SBPlan

Here are scenarios for, and sources of, the injustice in the criminal justice system in the USA.

The US has the most people incarcerated of any country in the world. Even though we have only 4.3% of the world’s population, we have more inmates (2.2 million) than China (1.5 million) and India (0.3 million) combined, and those two countries hold 36.4% of the world’s population! We have 23% of China’s population but nearly 50% more people incarcerated. Nearly three-quarters of 1% of our population (0.737%) is incarcerated! Our incarceration rate is 6 times China’s, 12 times Japan’s, and 24 times the rates in India and Nigeria. That’s right: an American is about 12 times as likely to be incarcerated as a Japanese citizen. Our rate is even 20% higher than Russia’s, where 0.615% of the population is in (Siberian) prisons and jails.
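Comparisons like these are easy to garble, because “N times as high” and “N × 100% greater” are not the same thing. A minimal Python sketch, using only the rates quoted above, makes the conversion explicit (the helper names are illustrative):

```python
def ratio(a: float, b: float) -> float:
    """How many times as large a is compared with b."""
    return a / b


def percent_greater(times_as_large: float) -> float:
    """Convert 'N times as large' into 'X% greater': N times = (N - 1) x 100% more."""
    return (times_as_large - 1.0) * 100.0


# Incarceration rates quoted above, as a percent of each population.
us_rate = 0.737
russia_rate = 0.615

us_vs_russia = ratio(us_rate, russia_rate)
print(f"US vs Russia: {us_vs_russia:.2f}x, i.e. {percent_greater(us_vs_russia):.0f}% higher")

# "12 times the rate" means 1,100% greater, not 1,200% greater.
print(f"12x the rate = {percent_greater(12):.0f}% greater")
```

The same two functions work for any pair of rates; the key point is subtracting 1 before converting a multiple into a percentage increase.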

I know what you’re thinking: Americans must be more criminally inclined than any other country in the universe. And, no, it is not those *bleeping* Mexicans. The evidence shows that Mexicans (legal or otherwise) commit fewer crimes than the typical “American”, plus crimes involving illegal Mexicans are far more likely to go unreported.

So now, I’m at a loss. Where did the criminal genes come from? You can’t really blame the American Indians.

Some of the ugly mechanisms and profits in the prison system are summarized nicely here in ATTN by Ashley Nicole Black, “Who Profits from Prisons” (February 2015).

“There are currently [2.2 million] Americans in prisons. This number has grown by 500 percent in the past 30 years. While the United States has only [4.3] percent of the world’s population, it holds 25 percent of the world’s total prisoners. In 2012, one in every 108 adults was in prison or in jail, and one in 28 children in the U.S. had a parent behind bars.”

For years I heard stats that half of the people in prison in the USA were there for non-violent (no weapon) drug offenses. That’s insane. It seems like the wrong people are institutionalized here. With the legalization of marijuana in many states, these incarceration rates should be falling (improving). Federal Bureau of Prisons figures for July 2018 show that 46% of federal inmates are held for drug offenses: https://www.bop.gov/about/statistics/statistics_inmate_offenses.jsp

Okay, so what does that have to do with scenarios and scenario planning? What scenarios might lead to something saner in terms of our incarceration rates? One approach would be to focus on the inflection points that might result in fewer criminals (less criminal activity). Just one would be a new approach to the prohibition of marijuana. As we learned from alcohol, prohibition doesn’t work. But there are several other ways to provide a mechanism for less criminal activity, fewer people incarcerated, and/or shorter sentences. We’ll talk about two of our favorites at a later time: education and community engagement/involvement. (The Broken Window concept of fixing up the community and more local engagement is very intriguing. See article by Eric Klinenberg here.)

The big thing that escalated US incarceration rates was a get-tough-on-crime movement that began during the Nixon “I’m-not-a-crook” era. Part of this was obviously to have some tools to go after the hippies and the protesters. Tough-on-crime mandatory sentences, lots of drug laws, and three-strikes laws came into being. Not to be outdone in the race to be toughest on crime, three strikes moved to two strikes and, essentially, to one strike. As we filled up the prisons, we had to build more.

One current trend that could increase incarceration is the ongoing epidemic of opioid overdoses. Most forces, however, seem to be pushing toward reductions in incarceration.

Various scenarios should lead to a significant reduction in incarceration rates, and the resulting low-incarceration scenario should have several ramifications. If you are in the business of incarceration, then business should, ideally, get worse and worse. GEO Group and Corrections Corporation of America (now CoreCivic) should expect their business to drop off precipitously. Plus, there seem to be several movements away from private (or publicly traded) companies back toward government-run prisons, because private prisons have been shown to be less effective, even if cheaper on a per-inmate-year basis.

Here’s a discussion of the business of incarceration. Note that the “costs” of incarceration are far, far more than the roughly $50,000 it costs per inmate per year. Plus, people who are productive members of society are working (adding income and GDP) and paying taxes, rather than being a dead weight on society.

Do you think that the relaxation of marijuana laws might be a “Sign Post” (in scenario terms) that indicates a rapid drop in prison population? Also, super-full employment might be a solution all by itself. People, especially kids, who can get jobs and do something more productive may be less inclined to get into drugs and mischief. There’s no reason the Sign Posts need to be limited to one, or even two, signs. In fact, the criminal justice system is just a subsystem of the economy, and multiple reinforcing systems can be really powerful.

If we do take other approaches to the incarceration system, what would those approaches be? And who (what businesses/industries) would benefit most?

What do you think? Is it time to get out of the criminal (in)justice system?

Resources

Half of the world’s incarcerated are in the US, China and Russia: http://news.bbc.co.uk/2/shared/spl/hi/uk/06/prisons/html/nn2page1.stm

Incarceration Rates: https://www.prisonpolicy.org/global/2018.html

US Against the world: https://www.statista.com/statistics/300986/incarceration-rates-in-oecd-countries/

New Yorker Article in Sept 2016 by Eric Markowitz, Making Profits on the Captive Prison Market.

How for-profit prisons have become the biggest lobby no one is talking about, by Michael Cohen in 2015.

Follow the money (2017): a great infographic showing where all the prison money goes.

There are several scenarios that jump out at you.

Hall and Knab (2012) outlined 11 or so unsustainable trends and practices that appeared to have compounding and accelerating forces. Those items get worse, in a wicked bad way, when they go unattended. Therefore, they are wonderful areas for generating scenarios. Here are a few: the US debt deficit, the US trade deficit, the interest-rate bomb, the lifestyle bomb, the compounding healthcare-cost escalation bomb, the fossil-fuel energy bust (peak) or bomb (massive government intervention), and the single-problem vs. integrated-problem dilemma.

There are a few more that jump out in recent months. The news, and its reliability, keep getting worse. Fake news has become a steady fact, and misinformation is well ahead of good, reliable journalism. The SustainZine blog wonders whether this is the time for a WikiTribune approach to journalism. There are many ways the broken news system can go: from really bad to even worse, or toward using the leverage of computers, networking and crowds to purify it (if only a little). It seems unlikely that the regular media world of news, near-news, or fake news will stay the same as it evolved in 2016 and 2017.

Another scenario-rich environment is global warming, renewables and fossil fuels. While companies have been steadily getting on board with the idea that they need to start aiming for sustainable business models, the politics have hit a kink. While China and India have made a massive about-face on the Paris conference and on actions toward warding off global warming, the US under Trump is about to go the other way. With the tug-of-war between the deniers and the greenies, it seems likely that something big is about to give: one side will lose and get pulled in, the other side will win, or the rope will snap. If the greenies are right, global warming will get very bad, very quickly… that’s ugly, but interesting. A lot of oil, gas and coal will be rendered useless because it can’t (shouldn’t) be burned. If the deniers are right, the oil, gas and coal companies have many more decades to enjoy unfettered combustion. And Ha Ha to those foolish fear-mongers in Paris.

Inflation. The US, with $20T in debt on a $19T economy (measured by GDP), currently pays about 9% of all government revenues in interest. (Revenue seems like the wrong word to use for government inflows.) That is at near-zero interest rates. When inflation and interest rates go up, the federal government will end up paying much, or even all, of its revenues toward servicing the debt. At just over 10% interest, the government would pay almost all revenues toward servicing the debt, leaving nothing for Medicare, SSI, or the military. Oh, and it has been about 8 years since we last had a good recession, which happens on average every 7 years.
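The debt arithmetic boils down to one ratio: annual interest (debt × average interest rate) divided by annual revenue. The sketch below assumes the post’s $20T debt figure; the revenue value is a hypothetical placeholder set to what the post’s “10% consumes almost everything” claim implies (about one-tenth of the debt), not a reported figure, so substitute the actual number.

```python
def interest_share_of_revenue(debt: float, avg_rate: float, revenue: float) -> float:
    """Fraction of annual government revenue consumed by interest on the debt."""
    return debt * avg_rate / revenue


def break_even_rate(debt: float, revenue: float) -> float:
    """Average interest rate at which interest alone would consume all revenue."""
    return revenue / debt


DEBT = 20e12     # $20T, the figure used in the post
REVENUE = 2e12   # hypothetical placeholder implied by the post's 10% claim

for rate in (0.02, 0.05, 0.10):
    share = interest_share_of_revenue(DEBT, rate, REVENUE)
    print(f"average rate {rate:.0%}: interest = {share:.0%} of revenue")

print(f"break-even rate: {break_even_rate(DEBT, REVENUE):.1%}")
```

Because the break-even rate is simply revenue ÷ debt, any claim about the rate at which interest swallows the budget is really a claim about that ratio.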

Gold is an interesting scenario. Lots of people thought it would shoot off into space, for many reasons. The US dollar is strong because it is the best shanty in the slum neighborhood, so gold should look good, relatively. But maybe cryptocurrencies like Bitcoin, massive government interventions around the world, and other factors have taken the luster off what some consider the most secure investment in the world?

What other scenarios do you see looming?

What’s the best way for a business to prepare for some of these scenarios that loom large?

References

Hall, E., & Knab, E.F. (2012, July). Social irresponsibility provides opportunity for the win-win-win of Sustainable Leadership. In C. A. Lentz (Ed.), The Refractive Thinker: Vol. 7. Social responsibility (pp. 197-220). Las Vegas, NV: The Lentz Leadership Institute.

(Available from www.RefractiveThinker.com, ISBN: 978-0-9840054-2-0)

Most of the hunters (academic researchers) searching for consensus in their Delphi research are new to the sport. They believe that they must bag really big game or come home empty-handed. But we don’t agree. In fact, once you have experienced Delphi hunting once or twice, your perception of the game changes.

Consensus is a BIG dilemma within Delphi research. However, it is generally an unnecessary consumer of time and energy. The original Delphi Technique used by the RAND Corporation aimed for consensus in many cases. That is, the U.S. government could either enter a nuclear arms race or not; there really was no middle ground. Consequently, it was counterproductive to build a technique that could not reach consensus. It became binary: reach consensus, and a plan could be recommended to the president; no consensus, and this too was useful, though less helpful, to inform the president. (That the experts could not come up with a clear path forward, even when applying a structured assessment process, is also very good to know.)

Consensus. The consensus process – getting teams of experts to think through complex problems and come up with the best solutions – is critical to effective teamwork and to the Delphi process. In most cases, however, it is not necessary – or even desirable – to come up with the one and only best solution. So long as there is no confusion about the facts and the issues, forcing a consensus when there is none is counter-productive (Fink, Kosecoff, Chassin & Brook, 1984; Hall, 2009, pp. 20-21).

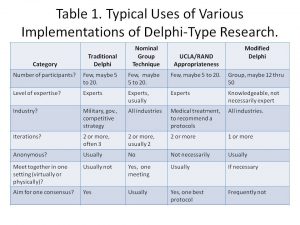

Table 1 shows the general characteristics of various types of nominal group study techniques (Hall & Jordan, 2013, p. 106). Note that the so-called traditional Delphi Technique and the UCLA-RAND appropriateness approaches aim for consensus. The so-called Modified Delphi might not search for consensus and might not utilize experts. Researchers use the UCLA-RAND approach extensively to look for the best medical treatment protocol when only limited data are available, relying heavily on the expertise of the doctors involved to suggest (sometimes based on their best informed guess) what protocol might work best. The doctors can recommend only one protocol, so consensus is needed here.

(Table reprinted with permission Hall and Jordan (2013), p. 106).

But consensus is rarely needed in business research, or even in most academic research, although some degree of it is usually found. For example, a study might be looking for the most important best business practices. Of a total list of 10 to 30 factors, only a few are MOST important. Often, the second round of Delphi aims to prioritize the qualitative factors identified in round 1. There are usually natural separation points between the most important factors (e.g., 4.5 out of 5), the medium-importance factors (3 out of 5), and the low-importance factors.
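One simple way to locate those natural separation points, offered here as an assumption rather than a standard Delphi rule, is to rank the factors by their mean round-2 rating and cut the list at the largest drop between neighbors. The factor names and ratings below are hypothetical.

```python
from statistics import mean


def rank_factors(ratings: dict[str, list[int]]) -> list[tuple[str, float]]:
    """Rank round-2 factors by mean rating, highest first."""
    return sorted(((name, mean(scores)) for name, scores in ratings.items()),
                  key=lambda item: item[1], reverse=True)


def split_at_largest_gap(ranked: list[tuple[str, float]]):
    """Split a ranked list (at least two factors) at the biggest drop between neighbors."""
    gaps = [ranked[i][1] - ranked[i + 1][1] for i in range(len(ranked) - 1)]
    cut = gaps.index(max(gaps)) + 1
    return ranked[:cut], ranked[cut:]


# Hypothetical round-2 ratings on a 5-point scale (five panelists per factor).
ratings = {
    "Customer focus": [5, 4, 5, 5, 4],
    "Cash management": [5, 5, 4, 4, 5],
    "Office location": [3, 3, 2, 3, 4],
    "Logo redesign": [2, 1, 2, 2, 1],
}

top, rest = split_at_largest_gap(rank_factors(ratings))
print("Most important:", [name for name, _ in top])
print("Others:", [(name, round(m, 1)) for name, m in rest])
```

Applying the split again to the remaining factors would separate the medium-importance tier from the low-importance tier.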

Those researchers who are fixated on consensus might spend time, maybe a lot of time, trying to find that often elusive component called consensus. There are usually varying levels of agreement. Five doctors might agree on one single best protocol, but 10 probably won’t, unanimously. Interestingly, as the number of participants increases, the ability to talk about the results in statistically significant terms increases; however, the likelihood of pure, 100% consensus diminishes. For example, a very small study of five doctors reaches unanimous consensus, but when it is repeated with 30 doctors, there is only 87% agreement. Obviously, one would prefer the quantitative and statistically significant results from the second study. (With Delphi you are usually forecasting; 100% agreement implies a degree of certainty about an uncertain future, and insisting on it can easily result in misapplying a very useful planning/research tool.)
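The trade-off in the doctors example can be made concrete with a confidence interval on the agreement rate. This sketch uses the Wilson score interval (our choice; other binomial intervals would make the same point) and reads the example’s 87% as 26 of 30 doctors, which is an assumption about how that figure arose.

```python
from math import sqrt


def wilson_interval(agree: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for the share of panelists who agree."""
    p = agree / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


for agree, n in [(5, 5), (26, 30)]:
    lo, hi = wilson_interval(agree, n)
    print(f"{agree}/{n} agree ({agree / n:.0%}): plausible range {lo:.0%} to {hi:.0%}")
```

Unanimity among five doctors is still consistent with true agreement as low as roughly 57%, while 26 of 30 narrows the plausible range to roughly 70% to 95%: less “consensus”, but a much more informative result.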

This brings us to qualitative Delphi vs. a more quantitative, mixed-method Delphi. Usually Delphi is considered QUAL for several reasons. It works with a small number of informed, or expert, panelists, and it usually gathers qualitative information in round 1. However, the qualitative responses are prioritized and/or ranked and/or correlated in round 2, round 3, etc. If a larger sample of participants results in 30 or more respondents in round 2, then the study probably should be upgraded from a purely qualitative study to a mixed-method one. That is, if the quantitative information gathered in round 2 is sufficient, statistical analysis can be meaningfully applied. Then you would look for statistical results (central tendency, dispersion, and maybe even correlation). You can compute a confidence interval for each factor, separating those that are very important (say 8 or higher out of 10, +/- 1.5) from those that aren’t. In this way, you can find the factors that are both important and statistically more important than other factors: a great time to declare a “consensus” victory.
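A minimal sketch of that round-2 analysis: mean and a 95% confidence interval per factor, plus a conservative check that one factor’s interval lies entirely above another’s. It uses a normal approximation (a t-multiplier would widen the intervals slightly for about 30 panelists), and the panel ratings are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def rating_interval(scores: list[float], confidence: float = 0.95) -> tuple[float, float, float]:
    """Mean and normal-approximation confidence interval for one factor's ratings."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    m = mean(scores)
    half = z * stdev(scores) / len(scores) ** 0.5
    return m, m - half, m + half


def clearly_above(a: tuple[float, float, float], b: tuple[float, float, float]) -> bool:
    """True when a's interval lies entirely above b's (the intervals do not overlap)."""
    return a[1] > b[2]


# Hypothetical round-2 ratings from 30 panelists on a 10-point scale.
panel = {
    "Factor A": [9, 8, 9, 10, 8] * 6,
    "Factor B": [8, 7, 9, 8, 8] * 6,
    "Factor C": [5, 6, 4, 5, 6] * 6,
}

results = {name: rating_interval(scores) for name, scores in panel.items()}
for name, (m, lo, hi) in results.items():
    print(f"{name}: mean {m:.2f}, 95% CI {lo:.2f} to {hi:.2f}")
print("A clearly above B:", clearly_above(results["Factor A"], results["Factor B"]))
```

Non-overlapping intervals are a deliberately strict test; a formal paired comparison between factors would detect smaller differences.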

TIP: Consider using more detailed scales. A 5-point Likert-type scale will not provide the same statistical detail as a 7-point or 10-point scale, or maybe even a ratio (0-100%) scale if it makes sense.

So, in the big game hunt for consensus, most hunters continue to look for the long-extinct woolly mammoth. Maybe they should “modify” their Delphi game for an easier search for success instead . . .

What do you think?

References

Hall, E. (2009). The Delphi primer: Doing real-world or academic research using a mixed-method approach. In C. A. Lentz (Ed.), The refractive thinker: Vol. 2: Research Methodology, (pp. 3-27). Las Vegas, NV: The Refractive Thinker® Press. Retrieved from: http://www.RefractiveThinker.com/

Hall, E. B., & Jordan, E. A. (2013). Strategic and scenario planning using Delphi: Long-term and rapid planning utilizing the genius of crowds. In C. A. Lentz (Ed.), The refractive thinker: Vol. 2. Research methodology (3rd ed., pp. 103-123). Las Vegas, NV: The Refractive Thinker® Press.

We are rapidly moving to one of the most disruptive innovations in modern computing: truly mobile computing, the driver-less car. These cars are going to have a lot of computing power on board. They will need to be self-contained, after all, when going through a tunnel or a parking lot. But they will be amassing massive amounts of data as well: 4 terabytes of data per day for the average self-driving car. Wow. And current mobile data plans start to charge you more or throttle you after about 10 GB of data usage per month.

Read about this in a great WSJ article by Greenwald on March 13. It focuses on the companies in play and on Intel’s new $15.3B bid to buy MobilEye, whose look-around and self-driving technology is going into GM, VW and Honda cars. The 34% premium shows how important this tech is to the slumbering chip giant.

What’s all the fuss about driver-less cars? How does going Driver-Less impact the future: what are potential interruptions, problems and/or discontinuities? How could this technology alter the strategic plans for many market leaders?

It seems likely that the majority of Americans will reject using or supporting driver-less vehicles… for a while. It removes individual control and decision making, and it emasculates the sense of manly power. One cannot demonstrate a charged-up ego to a potential partner when a computer and sensors are driving the speed limit behind a school bus. A driver can suddenly opt for a shortcut or a scenic route that he knows by heart; not so the driver-less vehicle. At the same time, Tesla drivers have already been reprimanded for letting the car do too much of the driving under too many unusual circumstances.

Just a few things to think further about: Long-Haul Trucking and Enabling Technologies.

Long-haul trucking. There is a major shortage of truck drivers. Labor rules don’t let drivers do long hauls without breaks or rest, so long-haul runs, such as coast to coast, often use two drivers in the same truck. If the truck needs to stop and drop loads along the way, a person on board might still be necessary. However, drops and pickups usually have someone at the warehouse who can assist. How will the truck fuel itself up at the Flying-J truck stops? If we can refuel fighter jets in mid-air, we can figure out how to fuel up a driver-less truck. One obvious solution (or not so obvious, if you’re not in the habit of longer-term and sustainable thinking) is to move to electric trucks and charging pads: simply drive the electric truck over a rapid-charging pad. Rapid charging is already generally available using current technologies (especially with minor improvements in batteries and charging).

Enabling Technology Units (ETUs). MobilEye-type technology applies to lots and lots of other situations, such as trucks, farm tractors, forklifts, etc. Much of the technology being developed for the driver-less car is what Hall & Hinkelman (2013) refer to as Enabling Technology Units (ETUs) in their guidebook to patent commercialization. The base technologies have many broad applications beyond the obvious direct market application. It is the Internet of Things, when the “things” are mobile, or when the “things” around them are mobile, or both. This is an interesting future for mobile computing.

References

Hall, E. B. & Hinkelman, R. M. (2013). Perpetual Innovation™: A guide to strategic planning, patent commercialization and enduring competitive advantage, Version 2.0. Morrisville, NC: LuLu Press. ISBN: 978-1-304-11687-1 Retrieved from: http://www.lulu.com/spotlight/SBPlan

It’s been about 10 years since the Great Recession of 2007-2009. (It formally started in December of 2007.) A 2009 McKinsey study showed that CEOs wished they had done more scenario planning, which would have made them more flexible and resilient through the Great Recession. Hall (2010) discusses the genius of crowds and group planning, especially scenario planning.

The Hall article spent a lot of time assessing group collaboration, especially utilizing the power available via the Internet. Wikipedia is one of the greatest, and most successful, collaboration tools of all time. It is a non-profit that draws on millions of volunteers to add content and regulate the quality of the facts. In this day of fake news, Wikipedia is a stable island in a turbulent ocean of content. Anyone who has corrections to make to any page (called an article) is encouraged to do so. However, the corrections need to be fact-based and source-rich. Unlike a typical wiki, where anything goes, the quality of content is very tightly controlled. As new information and research come out on a topic, Wikipedia articles usually reflect those changes quickly and accurately. Bogus information usually doesn’t make it in, biased writing is usually flagged, and sources are requested when an unsubstantiated fact is presented.

Okay, that’s one of the best ways to use crowds: people with an active interest, and maybe even a high level of expertise, update the content. But what happens when the crowd is a group of laypeople? Jay Leno built much of a career on the “wisdom” of people on the street in his Jaywalking segments. The lack of general knowledge in many areas is staggering. Info about the latest celebrity scandal or gossip, on the other hand, might be really well circulated. So how can you gather information from a crowd of people when the crowd may be generally wrong?

It turns out that researchers at MIT and Princeton have figured out how to use statistics to tell when the crowd is right and when an informed minority is much more accurate (Prelec, Seung & McCoy, 2017). (See a Daniel Akst overview WSJ article here.) Let’s say you are asking a lot of people a question on which the general crowd is misinformed. The answer, on average, will be wrong. There might be a select few in the crowd who really do know the answer, but their voices are drowned out, statistically speaking. These researchers took a very clever approach: they asked a follow-on question about what everyone else would answer. The people who really know often have a very accurate idea of how wrong the crowd will be. So the questions with big disparities can be identified, and you can give credit to the informed few while ignoring the loud noise from the crowd.

Very cool. That’s how you can squeeze out knowledge and wisdom from a noisy crowd of less-than-informed people.
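Here is a minimal sketch of that idea for a yes/no question, sometimes called the “surprisingly popular” rule: choose the answer that is more common than respondents predicted it would be. It handles only binary questions, and the poll data (a Pennsylvania-capital question) are hypothetical.

```python
from collections import Counter
from statistics import mean


def majority_answer(votes: list[str]) -> str:
    """Plain wisdom-of-crowds: the most common answer."""
    return Counter(votes).most_common(1)[0][0]


def surprisingly_popular(votes: list[str], predicted_yes: list[float]) -> str:
    """Binary 'surprisingly popular' rule (after Prelec, Seung & McCoy, 2017).

    votes: each respondent's own answer, "yes" or "no".
    predicted_yes: each respondent's guess at the share of others who will say "yes".
    Returns the answer that is more common than the crowd predicted (ties go to "no").
    """
    actual_yes = sum(v == "yes" for v in votes) / len(votes)
    expected_yes = mean(predicted_yes)
    return "yes" if actual_yes > expected_yes else "no"


# Hypothetical poll: "Is Philadelphia the capital of Pennsylvania?"
# Six respondents wrongly say yes and expect ~90% to agree; four informed
# respondents say no (it's Harrisburg) but expect most others to say yes.
votes = ["yes"] * 6 + ["no"] * 4
predicted_yes = [0.9] * 6 + [0.7] * 4

print("Majority answer:", majority_answer(votes))
print("Surprisingly popular answer:", surprisingly_popular(votes, predicted_yes))
```

The majority says “yes”, but only 60% actually answered “yes” while the crowd expected about 82%, so “no” is the surprisingly popular (and correct) answer.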

The question begs to be asked, however: why not simply ask the respondents how certain they are? Or maybe ask the people of Pennsylvania what their state capital is, rather than people in the other 49 states, who will generally get it wrong. Maybe even put some money on it: reward correct answers and make incorrect answers costly, so that only the crazy or the informed will “bet the farm” on answers they are not absolutely sure of?

But then, that too is another study.

Now, to return to scenario planning. Usually with scenario planning, you would have people who are already well informed. However, broad problems span different silos of expertise. Maybe a degree of comfort or confidence could be captured in the process of scenario creation, so that areas where a specific participant feels more confident get more weight than areas where their confidence is lower. Hmm… sounds like something that could be done very well with Delphi, provided there were well-informed people to poll.
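A minimal sketch of confidence weighting, assuming each panelist gives an estimate plus a self-rated confidence for that topic area. The weighting scheme (a simple weighted mean), the 1-5 confidence scale, and the supplier lead-time example are all hypothetical choices for illustration.

```python
def confidence_weighted_estimate(estimates: list[float], confidence: list[float]) -> float:
    """Average panelists' estimates, weighting each by self-rated confidence."""
    if not estimates or len(estimates) != len(confidence):
        raise ValueError("need one confidence rating per estimate")
    total = sum(confidence)
    if total <= 0:
        raise ValueError("confidence ratings must sum to a positive number")
    return sum(e * c for e, c in zip(estimates, confidence)) / total


# Hypothetical: three panelists estimate supplier lead time (weeks) under a
# supply-chain disruption scenario; the third is the logistics specialist.
estimates = [4, 6, 12]
confidence = [1, 1, 3]  # self-rated confidence on a 1-5 scale

print(f"Unweighted: {sum(estimates) / len(estimates):.1f} weeks")
print(f"Confidence-weighted: {confidence_weighted_estimate(estimates, confidence):.1f} weeks")
```

In a Delphi setting, the weighted figure could be fed back to the panel in the next round alongside the unweighted one, so participants can see how much the specialists moved the estimate.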

Note that scenarios are different from probabilities. Often scenarios are not highly probable; you are usually looking at possible scenarios that are viable. The “base case” scenario is what goes into the business plan, so that may be the 50% scenario; all the other scenarios cover everything else. The base case is only really likely to occur if nothing major changes in the macro- and microeconomic world. Changes always happen, but the question is whether a change “signals” that the bus has left the freeway and new scenario(s) are now at play.

On average, a recession occurs about 7 years into a recovery. We are about 10 years into recovery from the Great Recession. Of course, many of the Trump factors could be massively disruptive. Without naming them all, in the most positive case, 4% to 5% economic growth in the USA is a scenario that every business should be considering. (A strengthening US and world economy may, or may not, be directly caused by Trump.) The nice thing about sound scenario planning is that as new “triggers” arise, they may (should) lead directly into existing scenarios.
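The idea that new triggers should lead directly into existing scenarios can be sketched as a simple signpost monitor: each scenario lists the signposts that would suggest it is unfolding, plus the contingency plan to pull off the shelf. The scenario names, signposts, plans, and the 50% threshold below are hypothetical placeholders, not part of any published method.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    signposts: set[str]        # triggers that suggest this scenario is unfolding
    contingency_plan: str      # the plan to pull off the shelf
    threshold: float = 0.5     # share of signposts that must be observed before acting

    def signal_strength(self, observed: set[str]) -> float:
        """Fraction of this scenario's signposts that have been observed."""
        return len(self.signposts & observed) / len(self.signposts)


def triggered(scenarios: list[Scenario], observed: set[str]) -> list[tuple[str, float, str]]:
    """Scenarios whose signposts have crossed their threshold, strongest first."""
    hits = []
    for s in scenarios:
        strength = s.signal_strength(observed)
        if strength >= s.threshold:
            hits.append((s.name, strength, s.contingency_plan))
    return sorted(hits, key=lambda h: h[1], reverse=True)


# Hypothetical scenarios and signposts, for illustration only.
scenarios = [
    Scenario("Strong growth (4-5% US GDP)",
             {"GDP growth above 4%", "unemployment falling", "wages rising"},
             "expand capacity and hiring"),
    Scenario("Recession",
             {"inverted yield curve", "orders falling", "layoffs rising", "credit tightening"},
             "activate cost-containment plan"),
]
observed = {"inverted yield curve", "orders falling", "unemployment falling"}

for name, strength, plan in triggered(scenarios, observed):
    print(f"{name}: {strength:.0%} of signposts seen -> {plan}")
```

Requiring several reinforcing signposts, rather than a single one, reduces the chance of switching plans on one noisy data point.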

Having no scenario planning in your business plan… now that seems like a very bad plan.

References

Hall, E. (2009). The Delphi primer: Doing real-world or academic research using a mixed-method approach. In C. A. Lentz (Ed.), The refractive thinker: Vol. 2. Research methodology (2nd ed., pp. 3-28). Las Vegas, NV: The Lentz Leadership Institute. (www.RefractiveThinker.com)

Hall, E. (2010). Innovation out of turbulence: Scenario and survival plans that utilizes groups and the wisdom of crowds. In C. A. Lentz (Ed.), The refractive thinker: Vol. 5. Strategy in innovation (5th ed., pp. 1-30). Las Vegas, NV: The Lentz Leadership Institute. (www.RefractiveThinker.com)

Prelec, D., Seung, H. S., & McCoy, J. (2017, January 26). A solution to the single-question crowd wisdom problem. Nature, 541(7638), 532-535. https://doi.org/10.1038/nature21054 Retrieved from: http://www.nature.com/nature/journal/v541/n7638/full/nature21054.html

Dr Dave Schrader recently (December 2016) completed a very cool dissertation pertaining to the IRS and their (in)ability to assess tax preparers’ competency, and their (in)ability to test the preparers’ preparedness. {Sure, that’s easy for you to say!}

Over the last few years, the IRS has been charged with determining tax preparers’ competency. (Not the CPAs, mind you, but the millions of, shall we say, undocumented tax preparers.) The problem was that the IRS had not really determined what the preparers should know before trying to test that they knew it.

Just as the IRS was starting to launch competency “testing,” the civil courts forced Congress to pull the rug out. Another year or so has passed since a voluntary compliance program was put in place… and there are still no uniform requirements as to what preparers should know in order to be prepared for the tests. But most importantly, now it’s not just tests, even if they start up again. With the change in federal law governing competency, tax preparers must be competent every single time they sign their name to a tax return, no matter how complicated!

What could go wrong with this?! 🙂

So, Dave’s challenge was to do a dissertation on this murky quagmire. He identified the requirements, what preparers should know (generally), how they should learn it, and how competency should be assessed. As an afterthought, he tied this all into learning theory: to frame the skill identification, development, and assessment model, he tied the process into a construct for an effective total learning system.

If the dissertation sounds busy, that’s why. Lots of tables and charts to guide the reader through the mundane and the details.

Anyone teaching accounting should be interested in this dissertation. The management within the IRS should be calling Dr Dave in to assist with their Preparer Preparedness Program!

From a Human Resources (HR) or management perspective, this is a very cool study. It starts with the skills needed and works backwards to how and where to develop those skills: education, on-the-job training, or job experience. This is most of the way to “HR backcasting” for developing the skills needed for future jobs. Although backcasting is often used in economic development, the method, by necessity, must consider the skills of the workers for those future jobs.

Can’t wait for the articles that will come out of this dissertation by this accountant (Accredited Accountant, Tax Preparer, and Advisor), teacher and newly minted Doctor.

References

Schrader, David M. (2016). Modified Delphi investigation of core competencies for tax preparers. D.B.A. dissertation, University of Phoenix, Arizona. Dissertations & Theses @ University of Phoenix database.