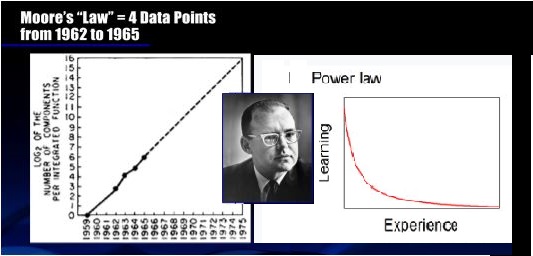

Moore’s law may be an instance of a more general phenomenon, namely the learning curve. But similar tools may come to the same conclusion. Disruptive technologies are coming to the silicon chip world.

Search results: "moore"

On April 4, 2019, the DC chapter of the IEEE Computer Society Chapter on Quantum Computing (co-sponsored by the Nanotechnology Council Chapter) met to see a presentation by an IBM researcher, Dr. Elena Yndurain, on that company’s recent efforts in quantum computing. I was fortunate enough to be […]

Do you know what Gordon Moore actually said? In 1965 Gordon Moore observed that if you graphed the increase in transistors on a planar semiconductor device using semi-log paper, it would describe a straight line. This observation ultimately became known as Moore’s law. The “l” is lower case in the academic literature because the […]

Last week the world’s biggest Electric Vehicle (EV) battery company made a big opening splash on its IPO. CATL is a Chinese company that IPOed with a massive 44% pop on open. The company offered up only 10% of the shares in the IPO, valuing the company at more than $12B. China has limits on […]

The researchers over at Strategic Business Planning Company have been contemplating scenarios that lead to the demise of oil. The first part of the scenario is beyond obvious. Oil (and coal) are non-renewable resources; they are not sustainable; burning fossil fuels will stop — eventually. It might cease ungracefully, and here are a few driving […]

Previously, we talked about the Tic-Toc of computing at Intel, and how Gordon’s law (Moore’s law) of computing – 18 months to double speed (and halve price) – is starting to hit a brick wall (Outa Time, the tic-toc of Intel and modern computing). Breaking through the 14-nanometer barrier is a physical limitation inherent in […]

Ed Jordan’s dissertation research looked at the future of computing. He was inspired by the thought that Gordon’s law (Moore’s law) of computing, 18 months to double speed (and halve price), was about to break down because of the limitations of silicon chips as they go below the 14-nanometer level. Since Intel […]